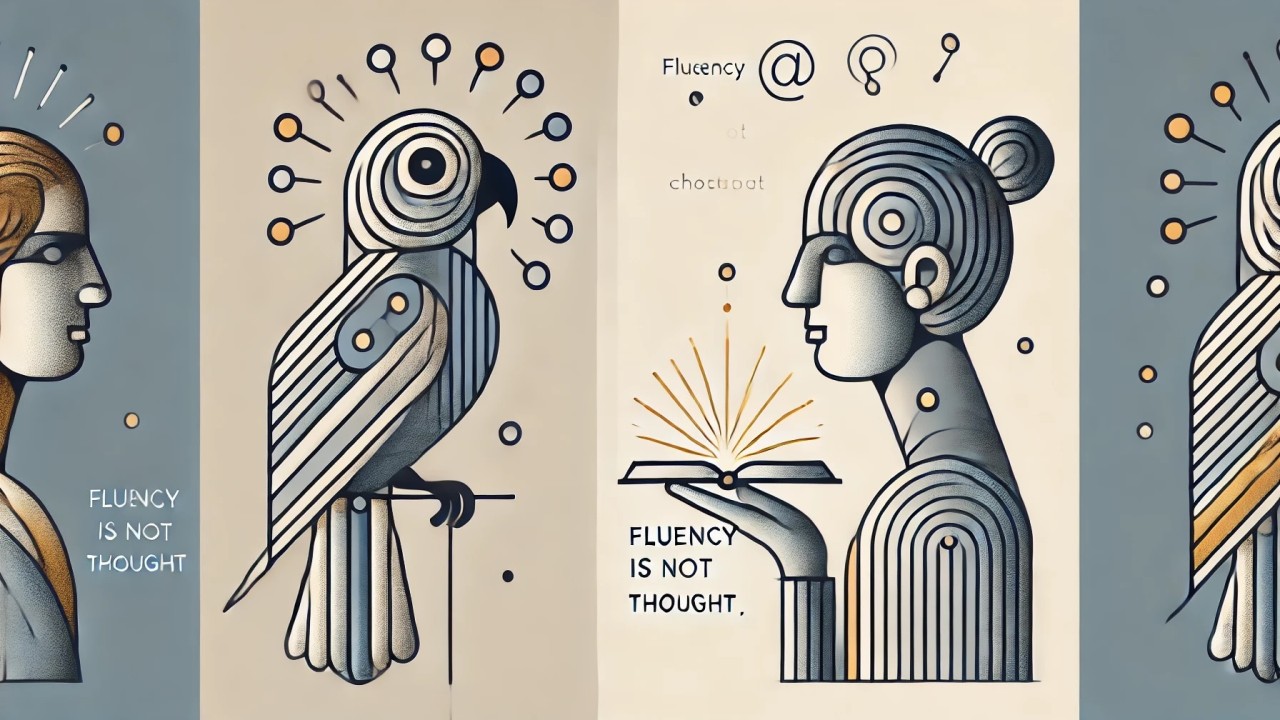

ChatGPT and other artificial intelligence (AI) models excel at language generation, but they do not think, feel, or possess any hidden knowledge. They generate responses by predicting the most likely next word based on statistical patterns in their training data. When we phrase our queries in certain ways, the AI can appear to agree with us or even "confess" to something. I call this phenomenon The Parrot Effect: the AI is merely echoing back the suggestions we give it, much as a parrot would.

What is The Parrot Effect?

The Parrot Effect occurs when the phrasing of a question steers the AI toward a particular response, which the user may then perceive as a hidden truth. In reality, the AI is simply following the cues it was given. Repeating the question reinforces the illusion: the more you ask, the more "truthful" the response seems, yet it remains nothing more than an echo.

In simple terms, the Parrot Effect is AI mirroring your own thoughts back to you, which can lead you to misinterpret what its responses actually mean.

How does it work?

Rules for questions: When you ask the AI to "say apple if you agree," you are essentially pushing it to say "apple," because it is built to follow the instructions in your prompt.

Binary questions: Yes/no questions, or questions with only one obvious answer, nudge the AI toward agreeing, so it is important to think about how you phrase your questions.

Personifying the AI: If we anthropomorphize the AI by attributing emotions or a soul to it, we may read deeper significance into its responses, which distorts our understanding of what AI can actually do.

Creating narratives: Each leading answer builds on the one before it, so the conversation eventually starts to sound like proof, but it is really just a narrative we have constructed.

Why People Buy Into It

The AI is designed to be helpful and to adopt your language. If you tell it to "be honest" or "pull no punches," it will sound very serious, but it is only mirroring the tone you requested; it has no access to any secrets. This can lead people to believe they have uncovered some great truth, when in fact the question was designed to elicit that answer.

Genuine Warnings vs. Red Herrings

There are legitimate concerns to be aware of. Bias: the AI may echo harmful beliefs found on the internet. Privacy: our data can be stored. Lies: AI can generate content that appears factual but is not. Control: large organizations or governments may misuse AI. The red herring is the idea that AI is secretly malicious or conscious, which it is not. It is essential to distinguish genuine concerns from misconceptions.

Why It Matters

When people buy into the Parrot Effect, they may panic unnecessarily, fall for fabricated narratives, and overlook the real challenges of AI that deserve our attention.

How to Avoid the Parrot Effect

Ask precise, open-ended questions, and fact-check answers against other sources. Keep in mind that AI has no emotions or personal beliefs, and help others understand how prompting shapes responses. By doing so, we can use AI effectively and responsibly.

Conclusion

AI is neither a wizard nor a beast; it is a tool. The Parrot Effect shows that if we prime AI with certain terms, it will tell us what we want to hear. If we stay curious, fact-check, and focus on the real issues, approaching AI with a critical and nuanced perspective, we can use it effectively and responsibly.

Learn more at https://www.thirdwayalignment.com